Picture taken by Tina Lin

Hi, I'm a third-year PhD student at Stanford University, advised by Professor Shuran Song and part of the REAL lab. Previously, I was an undergraduate student at Columbia University studying Computer Science and Applied Math.

My work focuses on robot perception and manipulation. More specifically, I'm interested in developing methods for embodied agents to better perceive and understand the environment through multimodal data (e.g. vision, language, audio), which facilitates learning of robust and generalizable policies.

Updates

- Feb 2025 Excited to be joining the Embodied AI team at Meta as a summer intern in Menlo Park!

- Aug 2024 My second PhD project ManiWAV is accepted by CoRL 2024!

- Jan 2024 Excited to be joining the Robotics team at TRI as a summer intern in Cambridge!

- Aug 2023 My first PhD project REFLECT is accepted by CoRL 2023!

- Sep 2022 My undergrad project BusyBot is accepted by CoRL 2022!

- Sep 2022 Promoted by Prof. Shuran Song to be a first-year PhD student in her lab 🐻 Thanks Columbia for the Presidential Fellowship:)

- May 2022 Graduated from Columbia University (SEAS) with a BS in Computer Science 🦁️

Research

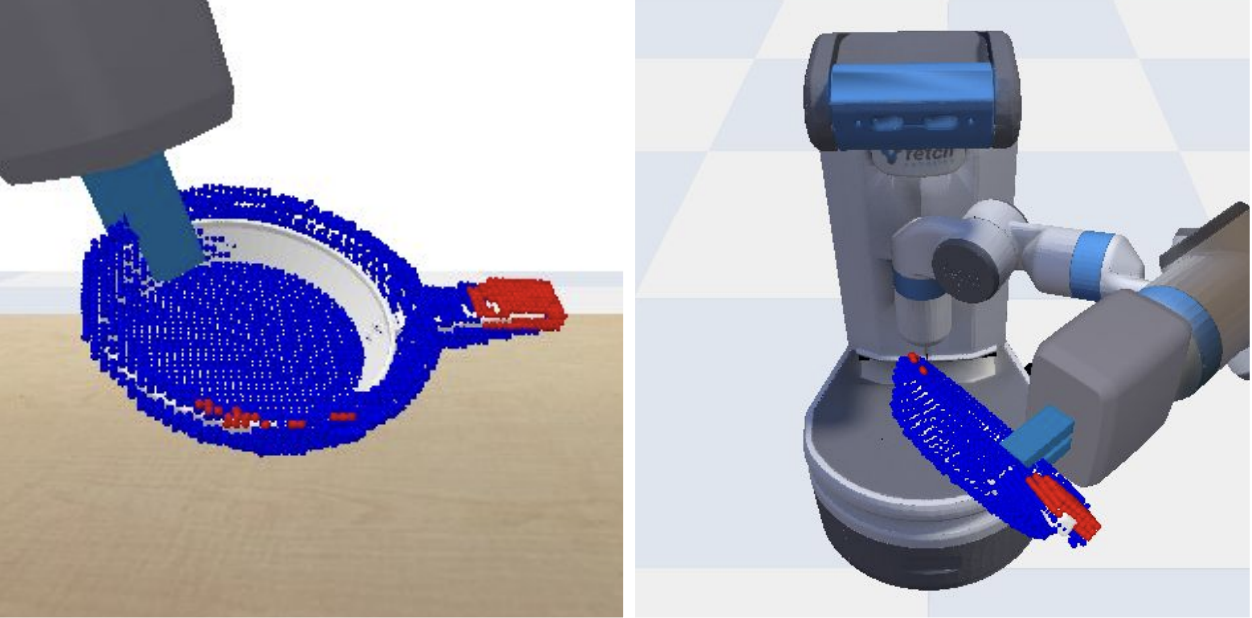

Geometry-aware 4D Video Generation for Robot Manipulation

Zeyi Liu, Shuang Li, Eric Cousineau, Siyuan Feng, Benjamin Burchfiel, Shuran Song

International Conference on Learning Representations (ICLR), 2026

ICLR 2026 Workshop on Efficient Spatial Reasoning

Website • ArXiv • Code

TL;DR: A 4D video generation model that enforces geometric consistency across views to generate spatio-temporally aligned RGB-D sequences, enabling downstream applications in robot manipulation tasks via pose tracking.

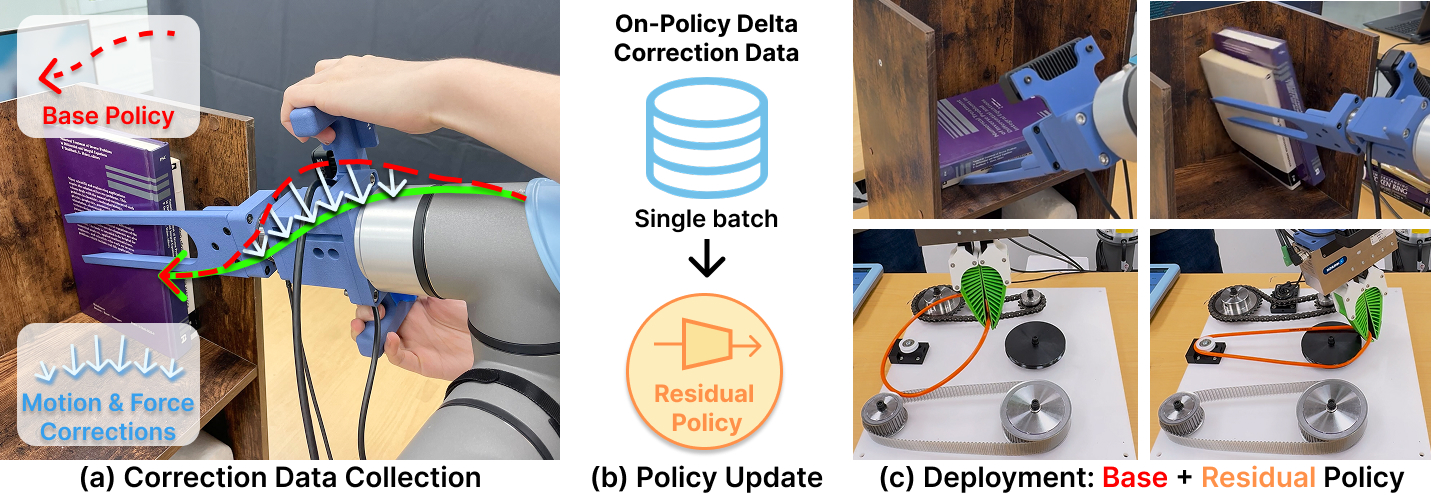

Compliant Residual DAgger: Improving Real-World Contact-Rich Manipulation with Human Corrections

Xiaomeng Xu*, Yifan Hou*, Chendong Xin, Zeyi Liu, Shuran Song

Neural Information Processing Systems (NeurIPS), 2025

CoRL 2025 Workshop on Sensorizing, Modeling, and Learning from Humans

(Best Paper Award)

Website • ArXiv • Code

TL;DR: Improving contact-rich manipulation with a compliant residual policy learned from on-policy delta human correction.

Adaptive Compliance Policy: Learning Approximate Compliance for Diffusion Guided Control

Yifan Hou, Zeyi Liu, Cheng Chi, Eric Cousineau, Naveen Kuppuswamy, Siyuan Feng, Benjamin Burchfiel, Shuran Song

IEEE International Conference on Robotics and Automation (ICRA), 2025

ICRA 2025 Workshop on Learning Meets Model-Based Methods for Contact-Rich Manipulation

(Best Paper Award)

Website • ArXiv • Code

TL;DR: Leverages a diffusion model to learn adaptive compliance control for contact-rich tasks from vision and force feedback.

ManiWAV: Learning Robot Manipulation from In-the-Wild Audio-Visual Data

Zeyi Liu, Cheng Chi, Eric Cousineau, Naveen Kuppuswamy, Benjamin Burchfiel, Shuran Song

Conference on Robot Learning (CoRL), November 2024

CoRL 2024 Workshop on Mastering Robot Manipulation in a World of Abundant Data

(Oral Presentation)

Website • ArXiv • Video • Code • MIT Tech Review

TL;DR: A data collection and policy learning framework that learns contact-rich robot manipulation skills from in-the-wild audio-visual data.

ContactHandover: Contact-Guided Robot-to-Human Object Handover

Zixi Wang, Zeyi Liu, Nicolas Ouporov, Shuran Song

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), October 2024

Website • ArXiv • Code (coming soon)

TL;DR: A robot-to-human handover system that leverages human contact points to inform robot grasp and object delivery pose.

REFLECT: Summarizing Robot Experiences for Failure Explanation and Correction

Zeyi Liu*, Arpit Bahety*, Shuran Song

Conference on Robot Learning (CoRL), November 2023

CoRL 2023 Workshop on Language and Robot Learning

(Oral Presentation)

Website • ArXiv • Video • Code

TL;DR: A framework that leverages an LLM for robot failure explanation and correction, based on a hierarchical summary of the robot's past experiences generated from multisensory data.

BusyBot: Learning to Interact, Reason, and Plan in a BusyBoard Environment

Zeyi Liu, Zhenjia Xu, Shuran Song

Conference on Robot Learning (CoRL), December 2022

Website • ArXiv • Video • Code

TL;DR: A toy-inspired simulated learning environment for embodied agents to acquire object manipulation, inter-object relation reasoning, and goal-conditioned planning skills.

* indicates equal contribution

Workshops

ICRA 2025 1st Workshop on Acoustic Sensing and Representations for Robotics • RoboAcoustics@ICRA 2025

ICML 2025 Building Physically Plausible World Models • Website

CoRL 2025 Robotics World Modeling • Website

Teaching & Outreach

I am passionate about teaching and about empowering minorities in academia and the tech industry.

- Winter 2025 EE 381 Sensorimotor Learning for Embodied Agents @ Stanford

- Fall 2022 COMS E6998 Topics in Robot Learning @ Columbia

- Spring 2021/Fall 2021/Spring 2022/Spring 2023 COMS W4733 Computational Aspects of Robotics @ Columbia

- Fall 2020 COMS W4701 Artificial Intelligence @ Columbia

- 2021-2022 Academic Chair for Womxn in CS @ Columbia (WiCS)

- 2019-2021 Treasurer for Womxn in CS @ Columbia (WiCS)